When you have hundreds to thousands of creators representing your brand on social media in their own authentic words, you need to learn to relinquish some control. So how do you ensure that those creators represent your brand well, while also allowing them artistic freedom?

“Brand safety” used to mean filtering out NSFW content and bad language, but like the rest of the influencer marketing industry, brand safety practices need to evolve with the times. Some brands can’t afford to avoid politics. Some brands want to take a stance on social issues. Some brands are embracing cannabis. Every company will have different brand safety standards, and it’s important that your creator partners know where your brand stands on different issues and topics.

Riskier, Less Risky, and the “Gray Middle”

Creator background vetting and ongoing monitoring are essential components of any influencer campaign strategy, but there are nuances to account for to best ensure brand safety. The first step is to determine what you consider to be an actual risk to your brand by establishing your comfort level with various topics.

What do you consider to be unquestionably risky content? Flag those items upfront and ensure your team knows that you do not want to work with influencers who have posted content pertaining to these issues. This might include:

- Heated political commentary

- Conspiracy theories

- Racist rhetoric

Then consider the other side of the coin: What is less risky? These are all things that you’ve decided don’t constitute a crisis if one of your creators posts about them, such as:

- Showing support for specific communities

- Fundraising

- Participating in cause-based activities

- Promoting sustainability

The tricky part is identifying what fits into the “gray middle” area between these two ends of your brand safety spectrum. This could include:

- Memes or jokes that are open to misinterpretation

- Posting PSA info about COVID that possibly hasn’t been vetted

- Posting about government leaders or organizations

- Political or religious content that differs from your beliefs but is not harmful

Once you’ve established the topics that fit into your “gray middle,” watch this space most closely, because those conversations can turn at any moment. The key is to be an active participant in your brand safety monitoring and to evolve your vetting terms regularly.
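One way to make this spectrum concrete for your team is to write it down as a simple lookup. The sketch below is illustrative only: the topic labels, the `RISK_SCHEMA` mapping, and the `classify` helper are all hypothetical names, and it assumes posts have already been labeled with topics by a person or a tool. It is not how any particular platform models risk.

```python
from enum import Enum


class Tier(Enum):
    LESS_RISKY = "less risky"
    GRAY_MIDDLE = "gray middle"
    RISKIER = "riskier"


# Hypothetical topic labels; every brand would define its own.
RISK_SCHEMA = {
    "heated political commentary": Tier.RISKIER,
    "conspiracy theories": Tier.RISKIER,
    "racist rhetoric": Tier.RISKIER,
    "ambiguous memes or jokes": Tier.GRAY_MIDDLE,
    "unvetted health information": Tier.GRAY_MIDDLE,
    "government leaders or organizations": Tier.GRAY_MIDDLE,
    "community support": Tier.LESS_RISKY,
    "fundraising": Tier.LESS_RISKY,
    "sustainability": Tier.LESS_RISKY,
}

# Tiers ordered from least to most severe.
SEVERITY = [Tier.LESS_RISKY, Tier.GRAY_MIDDLE, Tier.RISKIER]


def classify(topics):
    """Return the most severe tier among a post's topic labels.

    Topics missing from the schema default to the gray middle so that
    a person reviews them. A post with no topics is treated as less risky.
    """
    tiers = [RISK_SCHEMA.get(topic, Tier.GRAY_MIDDLE) for topic in topics]
    if not tiers:
        return Tier.LESS_RISKY
    return max(tiers, key=SEVERITY.index)
```

Defaulting unknown topics to the gray middle reflects the point above: new conversations are the ones most worth a human look, and the mapping itself should be revisited regularly.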

Keywords are the Key

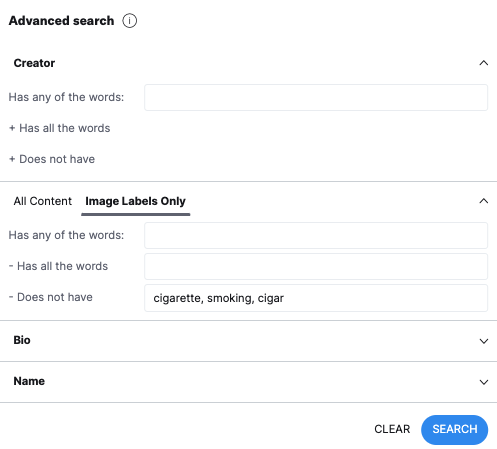

The CreatorIQ platform enables this hands-on approach by providing unparalleled keyword search capabilities. Users can search for a multitude of tagged terms to help filter out creators who are posting content that conflicts with their risk schema.

As an example, let's say your brand would rather work with creators who have not posted content related to smoking or cigarettes. You can filter through text like captions and hashtags, but also, thanks to CreatorIQ's machine learning technology, you can filter content through image recognition.
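To illustrate the text side of that smoking example, here is a minimal sketch of keyword vetting over captions and hashtags. It is not CreatorIQ's implementation or API: the post structure, `BLOCKED_TERMS`, and both helper functions are assumptions made for illustration, and image recognition is out of scope here.

```python
import re

# Hypothetical blocked terms for a brand avoiding smoking-related content.
BLOCKED_TERMS = {"smoking", "cigarette", "cigarettes"}


def post_terms(post):
    """Collect lowercased caption words plus hashtags (without the '#')."""
    words = set(re.findall(r"[a-z0-9']+", post["caption"].lower()))
    tags = {tag.lstrip("#").lower() for tag in post.get("hashtags", [])}
    return words | tags


def passes_vetting(posts, blocked=BLOCKED_TERMS):
    """True if none of the creator's posts contain a blocked term."""
    return all(post_terms(post).isdisjoint(blocked) for post in posts)


# Made-up sample data, assumed shape: creator name -> list of posts.
creators = {
    "creator_a": [
        {"caption": "Morning hike with the dog", "hashtags": ["#outdoors"]},
    ],
    "creator_b": [
        {"caption": "Late night vibes", "hashtags": ["#Smoking"]},
    ],
}

eligible = [name for name, posts in creators.items() if passes_vetting(posts)]
```

Exact-word matching like this misses variants such as "smoker" or deliberate misspellings, which is one reason to keep revisiting the term list.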

Addressing Risk

Communicating openly about campaign and creative briefs can help to mitigate risk before your creator partners even start posting on your brand’s behalf. Sharing overall content guidelines to inform the relationship will help a creator to understand your brand’s risk spectrum, and you can make it a collaborative process by asking them for input based on their experiences with their audiences. As part of these conversations, you might remind creators to:

- Check (and double-check) sources for accuracy

- Gut-check all content for moral and ethical inconsistencies

- Avoid using certain words and phrases, or focus on certain key messages (while also acknowledging that beyond your guidelines, a properly vetted creator should have the creative freedom to produce authentic content tailored to their audiences)

The contracting phase is another opportunity to mitigate concerns about future risky behavior upfront. When building your creator contracts, you can include morality clauses or other accountability-related language, such as specific words and phrases to avoid, in those documents from the outset to help inform the terms of the relationship.

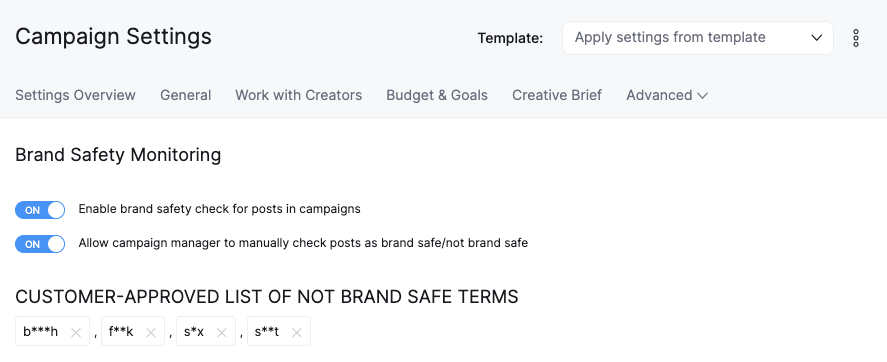

Once your campaign launches, CreatorIQ offers ongoing content monitoring features designed to flag common concerns and brand safety risks at scale, without any need to comb through individual posts to identify trigger words or other concerns. Clients can set up custom trigger words that flag content when a creator posts on behalf of a brand. These trigger words can include NSFW content or any words and phrases that are explicitly prohibited.

What if a creator has been posting on behalf of your brand for a few months when suddenly they post something that toes the line between your “gray middle” and other more risky zones? What do you do?

Start with empathy and let that guide your approach to the situation. If you discover a risky post from a creator who you have worked with in the past or are currently working with, consider how you want to address the concern with them as it relates to the strength of your partnership:

- If a deep, presently beneficial partnership exists, reach out with transparency and honesty, explain the situation, and find a solution that works for all

- If the relationship is limited or new, consider parting ways on fair terms. Again, explain the concern with transparency and honesty, and make sure the creator is appropriately compensated for any work completed to date

- If you aren’t yet working with the creator and there is no relationship, simply reconsider engaging that creator

Don’t Set it and Forget it

There’s no one way to guarantee brand safety--you can’t just set it and forget it. By leveraging the tools at your disposal to mitigate risk on an ongoing basis, and continuing to revisit your filters and vetting as new conversations arise in the public space, you’ll be able to build stronger relationships with your creator partners over time--and you can depend on that trust to keep your brand safe.